Web Experiment: Rendering 3D without WebGL

After too many years developing 3D apps with APIs like OpenGL, I found myself wondering why I had never written a rasterizer from scratch, creating the image pixel by pixel using just the CPU.

It is a really useful exercise for consolidating your mental model of the graphics pipeline. I even know people who use software rasterizers in their engines to solve visibility issues, or as a fallback for some extreme situations (for instance, 3D on the web in Internet Explorer! ha ha).

So today I started my own rasterizer, and I chose JavaScript, which is not the best environment when you are after performance. But I was surprised to see that three.js has its own built-in software rasterizer with amazing results, so why not?

If you are curious about how it is done, keep reading.

First, any 3D engine needs a bunch of basic matrix operations, which have to be well optimized for performance reasons. For this part I didn't want to waste my time coding a proper matrix class (I have done it before and it isn't interesting at all), so I grabbed the glMatrix library, which is known for its good performance and covers just the basics: matrix manipulation plus a few functions to construct 3D matrices.

Second, you need a framebuffer to render all the primitives into. On the web there is only one option for that: the HTML5 Canvas element, which also helps simplify the first steps thanks to its basic drawing functions (lines, shapes).

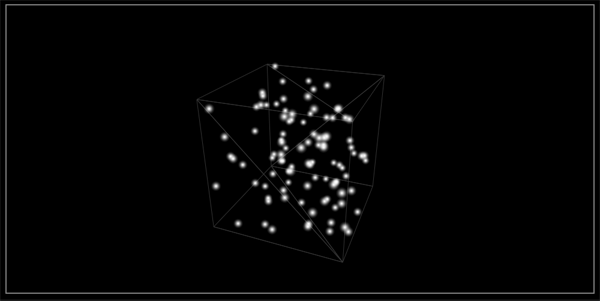

Then I needed some data to render, so I started by creating an array holding a cloud of points inside a given range.

    function Mesh() {
        this.vertices = [];
        this.faces = [];
    };

    Mesh.prototype.createCloud = function(size, num) {
        num = num || 100;
        this.vertices = [];
        this.vertices.length = num;
        for (var i = 0; i < num; ++i)
            this.vertices[i] = [
                (Math.random() * 2 - 1) * size,
                (Math.random() * 2 - 1) * size,
                (Math.random() * 2 - 1) * size
            ];
    }

Then I create the matrices that project 3D coordinates onto the 2D buffer, and set the parameters of that projection:

    this.projection = mat4.create();
    this.view = mat4.create();
    this.vp = mat4.create();
    this.mvp = mat4.create();
    this.viewport = [0, 0, canvas.width, canvas.height];

    var box = new Mesh();
    box.createCube(20);

    this.camera = {
        fov: 45,
        eye: [0, 50, 100],
        center: [0, 0, 0],
        up: [0, 1, 0],
    };

At the beginning of every frame, I build the view and projection matrices from the camera parameters:

    enableCamera: function(camera) {
        var aspect = camera.aspect || (this.viewport[2] / this.viewport[3]);
        mat4.perspective(camera.fov, aspect, 1, 1000, this.projection);
        mat4.lookAt(camera.eye, camera.center, camera.up, this.view);
        mat4.multiply(this.projection, this.view, this.vp);
        this.perspective_factor = this.viewport[2] / Math.tan(camera.fov * Math.DEG2RAD); //used for point rendering
    }

And for every vertex of my 3D shape, I apply the transformation from local coordinates to screen coordinates (this can be heavily optimized):

    projectPoint: function(transform, v) {
        //from local to normalized screen coordinates
        var tmp = [0, 0, 0];
        mat4.multiplyVec3(transform, v, tmp);
        tmp[0] /= tmp[2];
        tmp[1] = (1.0 - tmp[1]) / tmp[2];

        //from normalized to screen coordinates
        tmp[0] = (tmp[0] + 1) * (this.viewport[2] * 0.5) + this.viewport[0];
        tmp[1] = (tmp[1] + 1) * (this.viewport[3] * 0.5) + this.viewport[1];
        //tmp[0] = Math.round(tmp[0]+0.5); tmp[1] = Math.round(tmp[1]+0.5);
        return tmp;
    }
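For anyone who wants to try the projection step without any library, here is a minimal standalone sketch. It is not the code from this experiment: the function names (`perspective`, `transformPoint`, `projectToScreen`) are made up for illustration, and it assumes the usual OpenGL conventions (column-major matrices, camera looking down the negative Z axis). It also keeps the fourth component `w` and divides by it, which is the textbook perspective divide rather than the shortcut used in the snippet above.

```javascript
// Build an OpenGL-style perspective matrix, stored column-major.
// fovDeg is the vertical field of view in degrees.
function perspective(fovDeg, aspect, near, far) {
  var f = 1 / Math.tan((fovDeg * Math.PI / 180) / 2);
  var m = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0];
  m[0] = f / aspect;
  m[5] = f;
  m[10] = (far + near) / (near - far);
  m[11] = -1;
  m[14] = (2 * far * near) / (near - far);
  return m;
}

// Multiply a column-major 4x4 matrix by the point [x, y, z, 1].
// Returns all four components [x, y, z, w].
function transformPoint(m, v) {
  var x = v[0], y = v[1], z = v[2];
  return [
    m[0] * x + m[4] * y + m[8] * z + m[12],
    m[1] * x + m[5] * y + m[9] * z + m[13],
    m[2] * x + m[6] * y + m[10] * z + m[14],
    m[3] * x + m[7] * y + m[11] * z + m[15]
  ];
}

// Project a 3D point to pixel coordinates inside viewport [x, y, width, height].
// Returns null when the point is behind the camera (w <= 0).
function projectToScreen(m, v, viewport) {
  var c = transformPoint(m, v);
  if (c[3] <= 0) return null;
  var ndcX = c[0] / c[3]; // perspective divide: now in the -1..1 range
  var ndcY = c[1] / c[3];
  return [
    (ndcX + 1) * 0.5 * viewport[2] + viewport[0],
    (1 - ndcY) * 0.5 * viewport[3] + viewport[1] // canvas Y grows downwards
  ];
}
```

With a 90 degree field of view and a 100x100 viewport, a point straight ahead of the camera such as `[0, 0, -10]` should land in the middle of the viewport, and anything with positive Z comes back as `null`.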

And that's mostly it: I project every vertex and render it to the canvas using the moveTo and lineTo functions.
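The drawing part could look roughly like the sketch below. The `drawWireframe` name and the `edges` array of index pairs are assumptions made for this example (the mesh in this post only stores `vertices` and `faces`); the only real API used is the 2D context's `beginPath`, `moveTo`, `lineTo` and `stroke`.

```javascript
// ctx:    a CanvasRenderingContext2D (or anything with the same four methods)
// points: array of projected [x, y] screen positions, or null when not visible
// edges:  array of [i, j] index pairs into points (hypothetical layout)
function drawWireframe(ctx, points, edges) {
  ctx.beginPath();
  for (var i = 0; i < edges.length; ++i) {
    var a = points[edges[i][0]];
    var b = points[edges[i][1]];
    if (!a || !b) continue; // skip edges with a missing endpoint
    ctx.moveTo(a[0], a[1]);
    ctx.lineTo(b[0], b[1]);
  }
  ctx.stroke(); // one stroke call for the whole batch of segments
}
```

In the browser it would be called with something like `drawWireframe(canvas.getContext("2d"), projected, edges)`. Batching all the segments into a single path and stroking once tends to be cheaper than stroking every line separately.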

There are lots of features missing, from basic polygon ordering to discarding objects behind the camera, but that can wait.
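For the polygon ordering, the simplest thing I can think of is the classic painter's algorithm: sort the faces by depth and draw the farthest ones first. This is only a sketch of that idea, not something implemented here. It assumes each face is an array of vertex indices, that the vertices are already in view space, and that the camera looks down the negative Z axis, so farther means a more negative Z. The `sortFacesBackToFront` name is made up.

```javascript
// faces:        array of faces, each an array of vertex indices
// viewVertices: array of [x, y, z] positions in view space
// Returns a new array of faces ordered from farthest to nearest.
function sortFacesBackToFront(faces, viewVertices) {
  function averageDepth(face) {
    var sum = 0;
    for (var i = 0; i < face.length; ++i) sum += viewVertices[face[i]][2];
    return sum / face.length;
  }
  // slice() copies the array so the original face order is left untouched
  return faces.slice().sort(function (a, b) {
    return averageDepth(a) - averageDepth(b); // most negative Z (farthest) first
  });
}
```

Sorting by average depth gets it wrong when polygons intersect or overlap cyclically, which is the known limitation of this approach.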

The performance is pretty good and the quality is better than expected.

The next step will be to skip the Canvas drawing functions and write the pixels myself into an ArrayBuffer, then finally dump the image to the canvas.
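A rough idea of how that could work, as a sketch and not a finished design: keep the pixels in a `Uint8ClampedArray` (a typed array backed by an ArrayBuffer, four bytes per pixel in RGBA order), write into it directly, and copy it to the canvas once per frame through an `ImageData` object. The names `createFramebuffer`, `putPixel` and `present` are invented for this example; `createImageData` and `putImageData` are the standard 2D context methods.

```javascript
// A CPU-side framebuffer: width * height pixels, 4 bytes (RGBA) each.
function createFramebuffer(width, height) {
  return {
    width: width,
    height: height,
    data: new Uint8ClampedArray(width * height * 4)
  };
}

// Write one pixel, ignoring anything that falls outside the buffer.
function putPixel(fb, x, y, r, g, b, a) {
  x = Math.floor(x);
  y = Math.floor(y);
  if (x < 0 || y < 0 || x >= fb.width || y >= fb.height) return;
  var i = (y * fb.width + x) * 4; // rows are stored top to bottom
  fb.data[i] = r;
  fb.data[i + 1] = g;
  fb.data[i + 2] = b;
  fb.data[i + 3] = a;
}

// Browser only: copy the framebuffer to a canvas 2D context.
function present(ctx, fb) {
  var image = ctx.createImageData(fb.width, fb.height);
  image.data.set(fb.data);
  ctx.putImageData(image, 0, 0);
}
```

The bounds check in `putPixel` is the crudest form of clipping, but it keeps a stray coordinate from writing into the wrong row.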

We will see 🙂

Friday, May 25th, 2012 @ 7:31 pm
