First, a quick comment about render-to-texture. My RTs are based on FBOs (Frame Buffer Objects), a relatively new OpenGL feature that not every graphics card supports well.

Yesterday I was testing my app under OSX, where I have everything installed (I used MacPorts to install Python and all the libraries), and it crashed because FBOs were not supported. That should be impossible: it is a MacBook and I know the card supports them, but for some reason the FBO-related OpenGL functions are not pointing to the right place in the driver. I don’t know how to solve it.

I also tested it on my other computer, which runs Windows 7, and there pygame crashes due to a problem with MSVCR71.dll. I guess it is a problem between SDL and Visual Studio. The funny part is that it works when I run the script from Python’s IDLE. Weird. So in the end, using Python and OpenGL is not as cross-platform as I thought.

My friend Miguel Angel also pointed out something curious: he had the same problem as Bastiaan when calling one of the OpenGL functions, but that problem didn’t occur for me. It has to be something to do with the versions we are using, so I guess the PyOpenGL API is not so solid and changes between versions more than I would like. That could be a problem in the future, we will see…

PostFX

Today I’m gonna try to code a simple PostFX shader to see if I’ve got the basics.

The wrapper I wrote for the shaders is too simple right now: it doesn’t support uploading textures to the shader, and that is mandatory, so that’s my first enhancement. Also, I haven’t tested loading and showing a texture; indeed, I coded the RT class without having a Texture class first (usually RT inherits from Texture).

I was expecting to find a class that encapsulates all the boring parts of a texture, like texture formats, filters, etc., but I wasn’t so lucky.

I’m worried about something: I don’t want to end up doing the same thing I do every time I start coding in a new language or platform. I always start by wrapping the things I need, and I keep doing technical stuff for so long that I get bored. Instead of creating the application I create the tools, and the application never comes… It is a death spiral I always fall into. In game development there is a saying: “those who code game engines never code a game”, and it is true. As soon as you start coding your own engine, you are always chasing a new feature instead of trying to create something with the features you already have.

So my plan now is to build only the ultra-basic pieces: textures, shaders, RTs, maybe meshes (something simple) and a tiny graph editor, but I will talk about that when the time comes.

OK, so I made a PostFX shader. I added the function to upload a texture to a shader, and for my first test I rendered the same scene with the colors inverted, and it worked.

gl_FragColor = vec4(1,1,1,1) - texture2D(texture,gl_TexCoord[0].st);

I was thinking of leaving that as the PostFX example, but it was too simple, so I thought about something more complex.

When you are coding a PostFX shader, you read the pixel you are going to write from a texture, apply a function that takes that pixel’s color as input, and return the output from the pixel shader so it is written at the same position.
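To make that per-pixel model concrete, here is a minimal CPU sketch in plain Python. It is only an illustration, not the shader wrapper from this project: the names `apply_postfx` and `invert` are made up for the example, and an image is assumed to be a list of rows of `(r, g, b)` tuples in the range 0 to 1. The effect shown is the same inverted-colors test.

```python
# CPU sketch of a PostFX pass: one function call per output pixel.
# Assumption: an image is a list of rows, each pixel an (r, g, b) tuple in [0, 1].

def apply_postfx(image, effect):
    """Return a new image where every pixel is effect(original_pixel)."""
    return [[effect(pixel) for pixel in row] for row in image]


def invert(pixel):
    """Inverted colors: 1 - value for each channel."""
    r, g, b = pixel
    return (1.0 - r, 1.0 - g, 1.0 - b)


scene = [
    [(0.0, 0.0, 0.0), (1.0, 0.5, 0.25)],
    [(0.25, 0.25, 0.25), (1.0, 1.0, 1.0)],
]
result = apply_postfx(scene, invert)
```

The effect function only ever sees the one pixel it is about to write, which is exactly the limitation that matters once you want a blur.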

But some time ago I realized that when it comes to PostFX you usually want to read not only that pixel but also the average of the pixels around it, or in other words, a blurred version of the pixel. There are several options for that. One is to read all the pixels around yours from the texture and calculate the average, but for prototyping that is tedious. Another is to blur the texture before passing it to the shader, but then if you also want the original you need two textures.
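The first option (reading every neighbor and averaging) looks roughly like this on the CPU. This is a sketch under assumptions: `blurred_pixel` is a hypothetical helper, the image is a list of rows of `(r, g, b)` tuples, and coordinates outside the image are clamped to the edge. It also shows why the approach gets tedious: a radius of 1 already costs 9 reads per pixel.

```python
def blurred_pixel(image, x, y, radius=1):
    """Average the pixels in a (2*radius+1) x (2*radius+1) window around (x, y).

    Coordinates that fall outside the image are clamped to the nearest edge.
    """
    height, width = len(image), len(image[0])
    total = [0.0, 0.0, 0.0]
    count = 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            sx = min(max(x + dx, 0), width - 1)
            sy = min(max(y + dy, 0), height - 1)
            for c in range(3):
                total[c] += image[sy][sx][c]
            count += 1
    return tuple(channel / count for channel in total)


# A 3x3 black image with a single white pixel in the middle.
image = [[(0.0, 0.0, 0.0)] * 3 for _ in range(3)]
image[1][1] = (1.0, 1.0, 1.0)
center = blurred_pixel(image, 1, 1)
```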

So I found a trick: use the mipmaps. Textures have mipmaps, which are lower-resolution versions of the same texture, and OpenGL allows you to generate the mipmaps of a RenderTexture on the fly. It is kind of slow because it has to calculate the whole mipmap pyramid even when you only want two or three levels, but the good thing is that it is only one line of code, so I added the option to the RenderTexture class.
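As a rough picture of what the driver computes, here is a CPU sketch of a mipmap pyramid where each level averages 2x2 blocks of the level above. It assumes a power-of-two image stored as rows of `(r, g, b)` tuples, and `downsample` and `build_mipmaps` are illustrative names only. In OpenGL the usual call for this is `glGenerateMipmap` (or `glGenerateMipmapEXT` with the FBO extension); which one the RenderTexture class uses is not shown here.

```python
def downsample(level):
    """Build the next mip level by averaging each 2x2 block of pixels."""
    height, width = len(level), len(level[0])
    new_h, new_w = max(height // 2, 1), max(width // 2, 1)
    result = []
    for y in range(new_h):
        row = []
        for x in range(new_w):
            # Clamp so that levels one pixel wide or tall still work.
            ys = (min(2 * y, height - 1), min(2 * y + 1, height - 1))
            xs = (min(2 * x, width - 1), min(2 * x + 1, width - 1))
            samples = [level[sy][sx] for sy in ys for sx in xs]
            row.append(tuple(sum(s[c] for s in samples) / 4.0 for c in range(3)))
        result.append(row)
    return result


def build_mipmaps(image):
    """Return every level, from the full image down to a single pixel."""
    levels = [image]
    while len(levels[-1]) > 1 or len(levels[-1][0]) > 1:
        levels.append(downsample(levels[-1]))
    return levels


# 4x4 black and white checkerboard: every 2x2 block averages to mid gray.
checker = [
    [(1.0, 1.0, 1.0) if (x + y) % 2 == 0 else (0.0, 0.0, 0.0) for x in range(4)]
    for y in range(4)
]
levels = build_mipmaps(checker)
```

Reading from a higher level gives you an already-averaged neighborhood in a single texture read, which is why it works as a cheap blur. The blocky 2x2 averaging is also why it never looks as smooth as a proper Gaussian.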

I have used this trick in the past to create lens effects, motion blur, depth of field, etc. Obviously the results are not perfect compared with a good Gaussian blur, but it does the job.

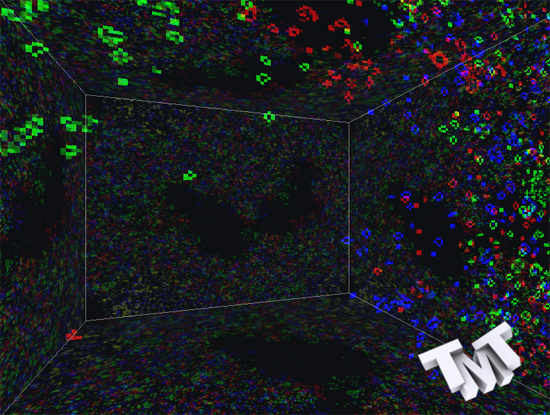

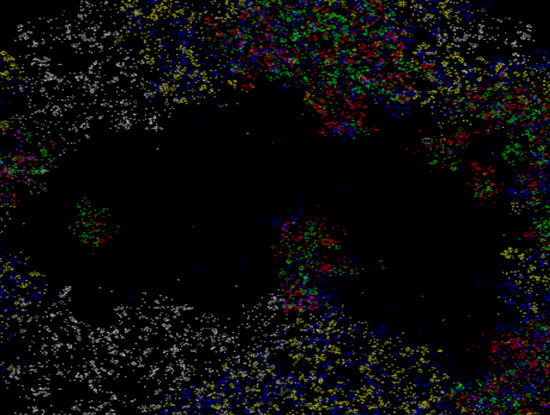

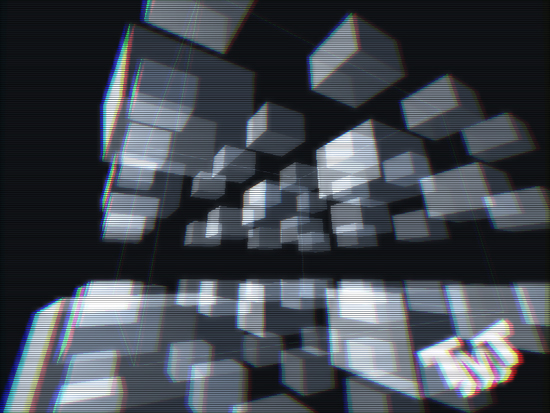

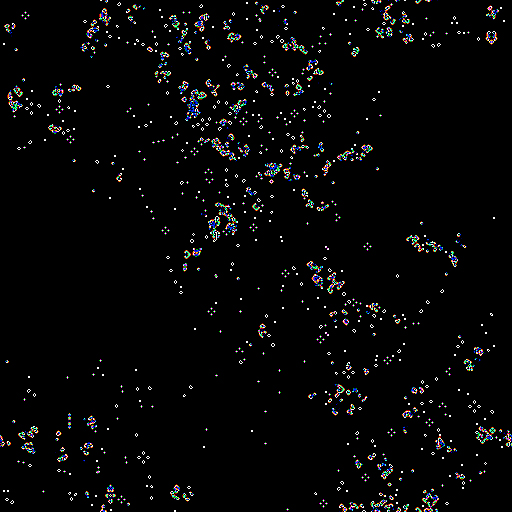

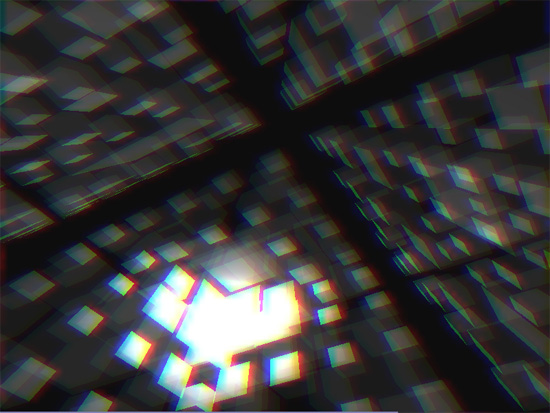

For this example I read the channels of the pixel separately, with a little offset in X, and I also add some blur to the red and blue components. Here are the results:

And here is the source code: hackpact day 3
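The channel-splitting part of the effect can be sketched on the CPU like this. It is not the shader from the download, just an illustration under assumptions: `sample` and `split_channels` are hypothetical names, the offset is in whole pixels, red is read from the left and blue from the right (the directions are a guess), and the extra blur on red and blue is left out to keep it short.

```python
def sample(image, x, y):
    """Read a pixel, clamping the coordinates to the image edges."""
    height, width = len(image), len(image[0])
    sx = min(max(x, 0), width - 1)
    sy = min(max(y, 0), height - 1)
    return image[sy][sx]


def split_channels(image, offset=1):
    """Read each channel from a different X position.

    Assumed layout: red from `offset` pixels to the left, green in place,
    blue from `offset` pixels to the right.
    """
    out = []
    for y in range(len(image)):
        row = []
        for x in range(len(image[0])):
            r = sample(image, x - offset, y)[0]
            g = sample(image, x, y)[1]
            b = sample(image, x + offset, y)[2]
            row.append((r, g, b))
        out.append(row)
    return out


# One row: black, white, black. The white pixel gets smeared into colored fringes.
strip = [[(0.0, 0.0, 0.0), (1.0, 1.0, 1.0), (0.0, 0.0, 0.0)]]
fringed = split_channels(strip, offset=1)
```

With this input the single white pixel turns into a blue, a green and a red pixel side by side, which is the colored fringe you see on edges in the screenshots.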