I’m three days behind, I know. I’ve been coding hard these last days but never found time to write about it on the blog, and I also wanted to have a nice version without bugs before sharing it here.

So what have I been coding these days? Well, I pushed aside the old code about cubes and cellular automata and started something new from scratch. But first I refactored my code a little to create a class that is a classic in almost every interactive application: the Application class.

This class encapsulates the ugly and boring code common to all Applications, like creating the window, the main loop, reading the input, calculating the elapsed time, quitting the app in a clean way, and some minor stuff.

When refactoring I tried to use as many Python tricks as I could instead of just writing with regular C++ habits. I followed some nice tutorials where they explain how to take advantage of Python features to reduce the amount of code. This one was particularly useful: Python Tips, Tricks, and Hacks

This translates to better use of lists, function parameters, and iteration in general.
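To give a flavour of the kind of idioms meant here, this is a small sketch. The brush names, sizes and the `make_brush` helper are made up for illustration; they are not taken from the actual source.

```python
# Hypothetical brush data, only for illustration.
names = ["round", "soft", "star"]
sizes = [8, 16, 32]

# List comprehension instead of an explicit loop with append().
doubled = [s * 2 for s in sizes]

# zip() walks two lists in parallel, enumerate() supplies the index.
labels = ["%d: %s (%dpx)" % (i, n, s)
          for i, (n, s) in enumerate(zip(names, sizes))]

# Default and keyword arguments replace several overloaded C++ functions.
def make_brush(name, size=16, alpha=1.0):
    return {"name": name, "size": size, "alpha": alpha}

soft = make_brush("soft", alpha=0.5)  # size falls back to its default
```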

I even added some exception handling to avoid leaving the window open if the application crashes, which was annoying.

So I refactored my old code to make it really simple to create an application from scratch. Here is one example:

    #!/usr/local/bin/python

    from OpenGL.GL import *
    from OpenGL.GLU import *
    from GLTools import *
    from shaders import *
    from Application import *

    WINDOW_SIZE = [800,600,False]

    class MyApp(Application):

        def init(self):
            glDisable( GL_CULL_FACE )
            self.logo_tex = Texture()
            self.logo_tex.load("data/tmt-logo.png")

        def render(self):
            glClearColor(0.0, 0.0, 0.0, 1.0)
            glClear(GL_COLOR_BUFFER_BIT|GL_DEPTH_BUFFER_BIT)
            glBlendFunc(GL_SRC_ALPHA,GL_ONE)
            glEnable(GL_BLEND)
            glColor3f(1.0,1.0,1.0)
            self.logo_tex.render([-1,1],[2,-2])

        def update(self, time_in_ms):
            pass

    app = MyApp()
    app.createWindow("My App", WINDOW_SIZE, WINDOW_SIZE[2] )
    app.start()

The formatting here may be a little bit messed up, but you can get the file from the source code download at the end of this entry.

Then I decided to create a 2D application. I’m a little bit tired of 3D cubes and I don’t plan to write a mesh loader for the moment; for now I want to focus on other ideas, more oriented toward pictures and basic shapes.

I remembered an old idea about creating something similar to a canvas to draw on, Photoshop style. I like to use Photoshop for illustration, but sometimes I have ideas for brushes that can’t be done with Photoshop’s features (maybe the latest versions have added them).

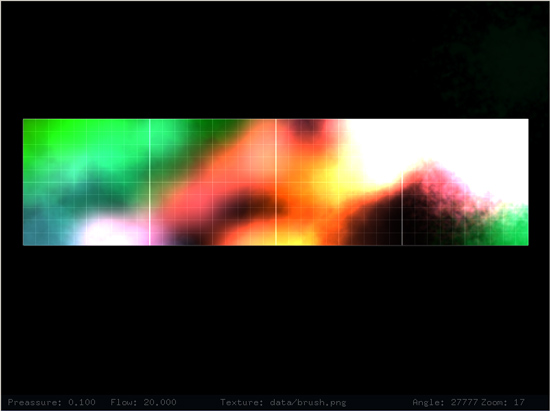

The idea is to create a RenderTexture (RT) and use it as a canvas, then draw textured quads on the RT when painting. They can be painted with blending to achieve cool overlay effects, and I have complete freedom to resize, rotate, or do other tricks with the brush. Creating the canvas was easy; it was done two days ago, pretty quickly, once I had the Application class to handle the mouse and keyboard events.

But I thought it wasn’t very interesting, mostly because the tool barely had any feature that couldn’t be done in Photoshop.

The next day I spent some time playing with the app and adding some common features like saving the image to disk, an Undo option, different brushes, control over the alpha and the repetition, etc.
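What stamping a brush with blending does to the canvas can be sketched on the CPU. This assumes additive blending, the same `glBlendFunc(GL_SRC_ALPHA, GL_ONE)` mode used in the example application above, on a single grayscale channel; the real thing runs on the GPU against the RT, and the `stamp` function here is purely illustrative.

```python
def stamp(canvas, brush, x0, y0, alpha):
    """Add brush * alpha onto canvas at (x0, y0), clamping to [0, 1].

    Mirrors result = src * src_alpha + dst, which is what the
    (GL_SRC_ALPHA, GL_ONE) blend function computes per channel.
    """
    for by, row in enumerate(brush):
        for bx, value in enumerate(row):
            x, y = x0 + bx, y0 + by
            # Skip the parts of the brush that fall outside the canvas.
            if 0 <= y < len(canvas) and 0 <= x < len(canvas[0]):
                canvas[y][x] = min(1.0, canvas[y][x] + value * alpha)

canvas = [[0.0] * 4 for _ in range(4)]   # 4x4 black canvas
brush = [[0.5, 1.0],
         [1.0, 0.5]]                     # tiny 2x2 brush texture

# Two overlapping strokes at half opacity build up the intensity.
stamp(canvas, brush, 1, 1, 0.5)
stamp(canvas, brush, 1, 1, 0.5)
```

Because the destination factor is one, every stroke only ever brightens the canvas, which is why overlapping strokes saturate so quickly.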

I have some ideas in mind for the future. For starters, I want to create special brushes that behave as if they had a life of their own (auto-brushes from now on). Then I want to create a big canvas, not just the one I have now but something bigger composed of several RTs, and leave the auto-brushes wandering around, drawing strange shapes.

I also have more features that I would like to add, like having layers ‘à la Photoshop’, sharing the canvas online like in webcanvas, or having a small class to do on-the-fly coding for the brushes.

Lots of ideas to explore in this field…

Problems found

During the refactoring I found some annoying problems related to Python and OOP, mostly because of some internal behaviours. For instance, all the brush instances were sharing the same list instance for the textures instead of each having its own, because I had initialized the list in the body of the class, not in the constructor.

Other issues were related to OpenGL. Dealing with RTs based on Framebuffer Objects is simple in concept but tricky in practice, mostly because they can behave erratically on some systems. My friend Miguel Angel is having some issues with the OpenGL code on his machine, and I’m having some on mine.
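The shared-list problem is easy to reproduce in a few lines. The class names and the texture file name below are hypothetical, chosen only to show the behaviour.

```python
class SharedBrush(object):
    textures = []            # created once, when the class body runs

    def add_texture(self, name):
        # Mutates the single list that lives on the class.
        self.textures.append(name)


class OwnBrush(object):
    def __init__(self):
        self.textures = []   # created again for every instance

    def add_texture(self, name):
        self.textures.append(name)


a, b = SharedBrush(), SharedBrush()
a.add_texture("round.png")
# b.textures now also contains "round.png": a and b see the same list.

c, d = OwnBrush(), OwnBrush()
c.add_texture("round.png")
# d.textures is still empty: each instance owns its list.
```

A class attribute is fine for immutable values such as numbers or strings; the surprise only shows up with mutable objects like lists and dicts that are modified in place.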

Also, I had a pixel resolution (bit depth) problem when making the canvas. If the brush paints too many quads in the same region, it is easy to quickly overdraw the same zone, which doesn’t look nice. The solution is to draw quads with a small alpha so the color builds up slowly, but this has a problem: if the brush alpha is too small and the texture also has alpha or color values close to zero, then when both values are multiplied and stored in the RT there is not enough resolution per pixel and the result is snapped to the closest representable value, creating ugly artifacts.

The solution is obvious: increase the resolution of the RT. Instead of having 8 bits per channel (the usual), I changed the RT code to support more formats, like 16 or 32 bits. This was tricky because I don’t know how they behave on different cards, and my first surprise came when I tested on my home computer: it was running at 2 frames per second, just because my GeForce 6600 doesn’t much like the RGB32F format. I made some fixes to use 16 bits, but I was disappointed that drawing a quad into a 32-bit texture could reduce the performance to 2 fps.

I had more problems but I don’t remember them now, probably because they weren’t very important.
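The arithmetic behind those artifacts can be simulated for one channel. This is a simplified model that assumes each write is rounded to the nearest of the 256 levels; actual rounding behaviour depends on the hardware, and both functions are illustrative only.

```python
def paint_8bit(stamps, brush_alpha, texel):
    """Accumulate in an 8-bit channel: every write lands on a 0..255 level."""
    stored = 0
    for _ in range(stamps):
        step = int(round(brush_alpha * texel * 255))  # contribution in levels
        stored = min(255, stored + step)
    return stored / 255.0

def paint_float(stamps, brush_alpha, texel):
    """Accumulate in a floating point channel, clamped to 1.0."""
    total = 0.0
    for _ in range(stamps):
        total = min(1.0, total + brush_alpha * texel)
    return total

# Brush alpha 0.02 over a texel of 0.05 adds 0.255 of a level per stamp,
# which rounds to 0: a hundred strokes leave the 8-bit canvas untouched.
faint_8 = paint_8bit(100, 0.02, 0.05)
faint_f = paint_float(100, 0.02, 0.05)   # builds up to about 0.1

# A texel of 0.1 adds 0.51 of a level, which rounds up to a full level,
# so the 8-bit canvas brightens about twice as fast as it should.
edge_8 = paint_8bit(100, 0.02, 0.1)      # 100/255, about 0.39
edge_f = paint_float(100, 0.02, 0.1)     # about 0.2
```

Neighbouring texels with nearly identical values can end up on opposite sides of the rounding threshold, which is where the visible banding comes from.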

Screenshots

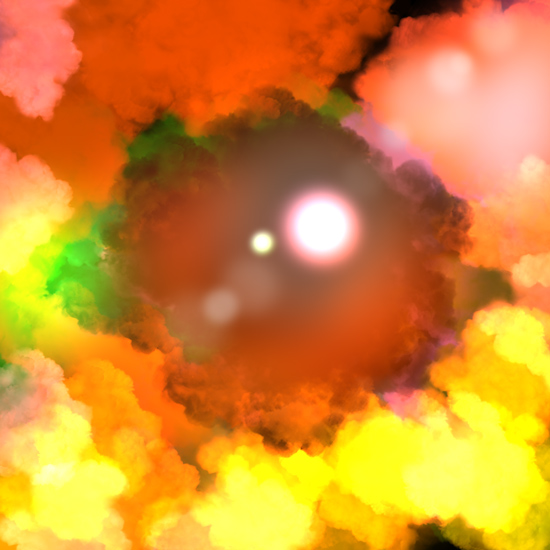

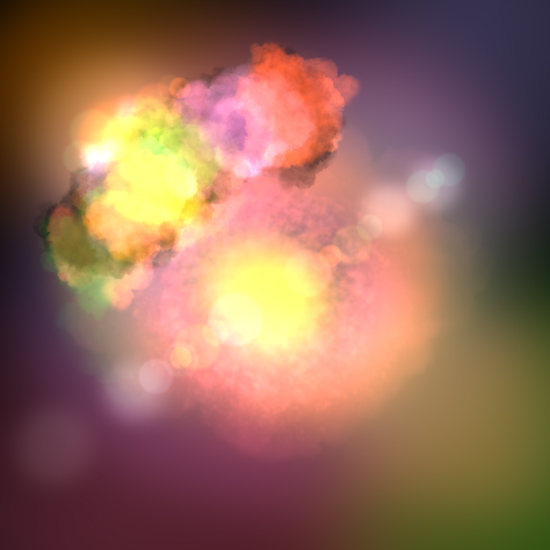

Here are some of my artistic results. I created some brushes in Photoshop, but I enjoy playing with the plain ones more.

Code

There are lots of keys so here is a list:

- 1-5 to change between brushes

- Control Z to Undo

- Control S to save to disk

- Keypad / and * to control flow

- Keypad + and - to control pressure

- Keypad . to change between white and rainbow color

- C to clear the buffer

- Mouse Wheel to control the brush size

- Shift Mouse Wheel to control brush rotation

You can download it from here: hackpact day 6